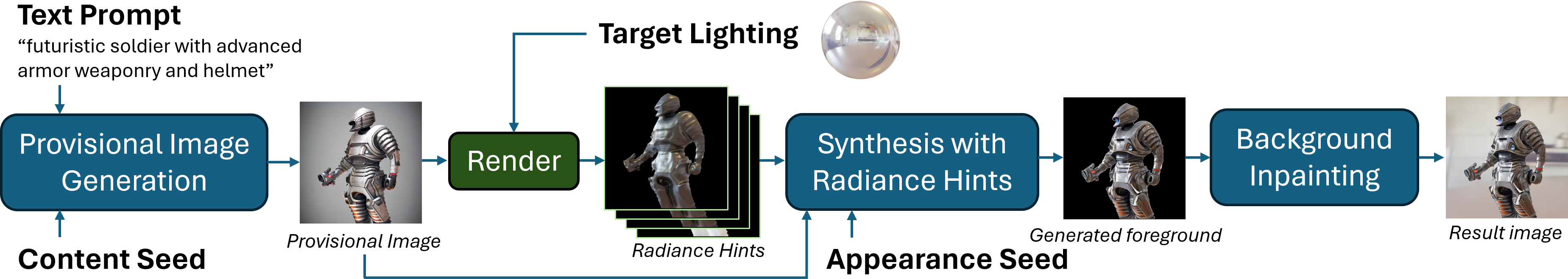

This paper presents a novel method for exerting fine-grained lighting control during text-driven diffusion-based image generation. While existing diffusion models already have the ability to generate images under any lighting condition, without additional guidance these models tend to correlate image content and lighting. Moreover, text prompts lack the expressive power needed to describe detailed lighting setups. To provide the content creator with fine-grained control over the lighting during image generation, we augment the text prompt with detailed lighting information in the form of radiance hints, i.e., visualizations of the scene geometry with a homogeneous canonical material under the target lighting. However, the scene geometry needed to produce the radiance hints is unknown. Our key observation is that we only need to guide the diffusion process; hence exact radiance hints are not necessary, and it suffices to point the diffusion model in the right direction. Based on this observation, we introduce a three-stage method for controlling the lighting during image generation. In the first stage, we leverage a standard pretrained diffusion model to generate a provisional image under uncontrolled lighting. In the second stage, we resynthesize and refine the foreground object in the generated image by passing the target lighting to a refined diffusion model, named DiLightNet, using radiance hints computed on a coarse shape of the foreground object inferred from the provisional image. To retain the texture details, we multiply the radiance hints with a neural encoding of the provisional synthesized image before passing the result to DiLightNet. Finally, in the third stage, we resynthesize the background to be consistent with the lighting on the foreground object. We demonstrate and validate our lighting-controlled diffusion model on a variety of text prompts and lighting conditions.
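To make the notion of a radiance hint more concrete, here is a minimal sketch; it is not the authors' renderer. It assumes the coarse foreground shape is available as a per-pixel unit normal map (for example from an off-the-shelf normal or depth estimator), a single directional light instead of a full environment map, and a canonical material made of one Lambertian lobe plus a few glossy lobes. The roughness values, the simplified glossy term (the GGX normal distribution times the cosine, with no Fresnel or shadowing), and the functions `radiance_hints` and `modulate_hints` are all hypothetical. `modulate_hints` illustrates one plausible reading of "multiply the radiance hints with a neural encoding of the provisional image": every hint channel scales every channel of a feature map, which is simply passed in here as a stand-in for a learned encoder.

```python
import numpy as np


def radiance_hints(normals, light_dir, view_dir=(0.0, 0.0, 1.0),
                   roughnesses=(0.05, 0.13, 0.34)):
    """Shade a coarse normal map with a homogeneous canonical material.

    Hypothetical sketch: one directional light, one Lambertian (diffuse)
    hint, and one simplified glossy hint per (assumed) roughness value.

    normals: (H, W, 3) unit normals; background pixels are zero vectors.
    Returns an (H, W, 1 + len(roughnesses)) array of per-pixel hints.
    """
    n = np.asarray(normals, dtype=np.float64)
    l = np.asarray(light_dir, dtype=np.float64)
    l = l / np.linalg.norm(l)
    v = np.asarray(view_dir, dtype=np.float64)
    v = v / np.linalg.norm(v)
    h = (l + v) / np.linalg.norm(l + v)  # half vector (assumes l != -v)

    n_dot_l = np.clip(n @ l, 0.0, None)  # cosine term, zero when facing away
    n_dot_h = np.clip(n @ h, 0.0, None)

    hints = [n_dot_l / np.pi]  # Lambertian lobe with unit albedo
    for alpha in roughnesses:
        a2 = alpha * alpha
        # GGX normal distribution function evaluated at the half vector.
        d = a2 / (np.pi * (n_dot_h ** 2 * (a2 - 1.0) + 1.0) ** 2)
        # Simplified glossy lobe: D * cos(theta_l), no Fresnel or shadowing.
        hints.append(d * n_dot_l)
    return np.stack(hints, axis=-1)


def modulate_hints(hints, features):
    """Multiply every hint channel with every channel of an image encoding.

    hints: (H, W, K); features: (H, W, C) from a hypothetical encoder of the
    provisional image. Returns an (H, W, K * C) array.
    """
    h, w, k = hints.shape
    c = features.shape[-1]
    return np.einsum("hwk,hwc->hwkc", hints, features).reshape(h, w, k * c)


if __name__ == "__main__":
    # Toy 2x2 "shape": one pixel facing the camera, the rest background.
    normals = np.zeros((2, 2, 3))
    normals[0, 0] = (0.0, 0.0, 1.0)
    hints = radiance_hints(normals, light_dir=(0.0, 0.0, 1.0))
    features = np.ones((2, 2, 8))  # stand-in for a learned encoding
    print(hints.shape, modulate_hints(hints, features).shape)
    # prints (2, 2, 4) (2, 2, 32)
```

Background pixels (zero normals) receive zero in every hint, so only the foreground carries lighting information. A coarse normal map and a simplified material are the kind of approximation the abstract argues is sufficient, since the hints only need to steer the diffusion model rather than match the true shading exactly.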

"rusty copper toy frog with spatially varying materials some parts are shinning other parts are rough"

"An elephant sculpted from plaster and the elephant nose is decorated with the golden texture"

"stone griffin"

"machine dragon robot in platinum"

"leather glove"

"a decorated plaster round plate with blue fine silk ribbon around it"

"3D animation character minimal art toy"

"steampunk space tank with delicate details"

"starcraft 2 marine machine gun"

"full plate armor"

"a large colorful candle, high quality product photo"

"gorgeous ornate fountain made of marble"

"rusty sculpture of a phoenix with its head more polished yet the wings are more rusty"

    @inproceedings{zeng2024dilightnet,
      title     = {DiLightNet: Fine-grained Lighting Control for Diffusion-based Image Generation},
      author    = {Chong Zeng and Yue Dong and Pieter Peers and Youkang Kong and Hongzhi Wu and Xin Tong},
      booktitle = {ACM SIGGRAPH 2024 Conference Papers},
      year      = {2024}
    }